DeepVariant

I joined the DeepVariant team at Google in 2019 and stayed for 4 years until 2023.

Visualizations

The first thing I did on the DeepVariant team was try to understand for myself how DeepVariant works. This ultimately resulted in a blog post, Looking Through DeepVariant’s Eyes, and in show_examples, a visualization tool for the pileup images that DeepVariant takes as input.

This work made everyone on the team much more familiar with these pileup images, especially because I made everyone play the “can you beat DeepVariant?” game and kept showing pileup images in my presentations (see an external example in the video below). For me, this led to my experiments with knocking out channels and with alt-aligned pileups, but I think it also influenced other team members to start experimenting more with those pileup images, paving the way for the HP channel.

I also created the VCF stats report, which includes a series of charts summarizing variant statistics from any VCF.

Conference talk

Here is a talk I gave in 2020 on DeepVariant:

This video also shows some interpretability work I did by passing various pileup images to the model and inspecting intermediate layers in the model.

Other interpretability experiments were only presented internally:

Knocking out each of the 6 channels in the pileup image, training a model for each, and seeing how the accuracy was affected. The worst accuracy drop was for the “read supports variant” channel for indels. This is because sites with more than one potential ALT have multiple pileup images, and that channel was the only one that distinguished which of the ALTs the model was supposed to predict. This knockout was rescued by the alt-aligned pileup method, which gave the model another way to see which ALT it was supposed to call. The team later published a blog post based on a continuation of this work.

Passing fake pileup images to the model, e.g. faking very bad mapping quality in an otherwise normal pileup image, and seeing how the model’s output changed. The results were largely as expected, e.g. zeroing out a quality channel made the model predict 0, meaning “not a variant”.
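Both experiments come down to editing one channel of a pileup image before it reaches the model. Here is a minimal sketch of that image manipulation only, using NumPy. The image shape, the channel names and their order, and the helper functions are assumptions made for illustration, not DeepVariant's actual code; training and inference are left out.

```python
import numpy as np

# Assumed channel order, for illustration only.
CHANNELS = [
    "read_base",
    "base_quality",
    "mapping_quality",
    "strand",
    "read_supports_variant",
    "base_differs_from_ref",
]


def knock_out_channel(pileup: np.ndarray, name: str) -> np.ndarray:
    """Return a copy of the pileup with one channel set to zero."""
    out = pileup.copy()
    out[..., CHANNELS.index(name)] = 0
    return out


def fake_channel_value(pileup: np.ndarray, name: str, value: int) -> np.ndarray:
    """Return a copy of the pileup with one channel overwritten by a constant."""
    out = pileup.copy()
    out[..., CHANNELS.index(name)] = value
    return out


# Random stand-in for a real pileup image: (rows, columns, channels).
rng = np.random.default_rng(0)
pileup = rng.integers(1, 255, size=(100, 221, 6), dtype=np.uint8)

# Knockout: the whole channel is removed.
no_support = knock_out_channel(pileup, "read_supports_variant")

# Fake image: very bad mapping quality in an otherwise unchanged pileup.
bad_mapq = fake_channel_value(pileup, "mapping_quality", 0)
```

In the knockout experiments a separate model was trained for each removed channel, whereas the fake images were passed to an already trained model.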

Alt-aligned pileup method led to accuracy improvements

I conceived and built the alt-aligned pileup method, whereby DeepVariant re-aligns reads to the proposed alternate allele and includes that view in its pileup images.

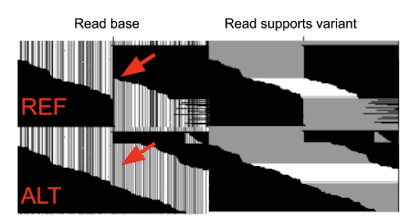

In this image, you can see an example of why the model gets more information: several reads that are grey in the “Read supports variant” channel actually map into the inserted ALT sequence, but they are not shown in white as supporting the variant because they do not span the entire variant.
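To make the idea concrete, here is a toy sketch of why aligning to the ALT helps for a read that only partly covers an insertion. The sequences, the helper names, and the ungapped mismatch count are all hypothetical stand-ins chosen for brevity. A real aligner uses gapped alignment, and this is not DeepVariant's realignment code.

```python
def build_alt_haplotype(ref_window: str, offset: int, ref_allele: str, alt_allele: str) -> str:
    """Replace the REF allele at `offset` in the window with the ALT allele."""
    if ref_window[offset:offset + len(ref_allele)] != ref_allele:
        raise ValueError("REF allele does not match the reference window")
    return ref_window[:offset] + alt_allele + ref_window[offset + len(ref_allele):]


def best_ungapped_mismatches(read: str, target: str) -> int:
    """Fewest mismatches over all ungapped placements of `read` within `target`."""
    return min(
        sum(a != b for a, b in zip(read, target[start:start + len(read)]))
        for start in range(len(target) - len(read) + 1)
    )


ref_window = "ACGTACGTTTGACCA"

# Hypothetical insertion written VCF-style: REF="T", ALT="TGGGCCC" at offset 7.
alt_haplotype = build_alt_haplotype(ref_window, 7, "T", "TGGGCCC")

# A read that starts inside the insertion, so it does not span the whole variant.
read = "CCCTTGACC"

mismatches_vs_ref = best_ungapped_mismatches(read, ref_window)
mismatches_vs_alt = best_ungapped_mismatches(read, alt_haplotype)
```

The read fits the ALT haplotype better than the reference even though it covers only part of the insertion, which is the extra evidence an alt-aligned view can expose.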

Alt-aligned pileups reduced indel errors by 24% for the PacBio model (quoted from this other blog post).

This alt-aligned pileup work is covered in the video above in more detail.

PrecisionFDA Truth Challenge V2

In 2020, we participated in the PrecisionFDA Truth Challenge V2 (paper). In the paper, our work is included under “The Genomics Team in Google Health” and “The UCSC CGL and Google Health”, as well as “PEPPER-DV” (DV is DeepVariant).

I added a hybrid Illumina/PacBio model to DeepVariant during the competition, which tied for first place with the highest overall accuracy in the multi-technology category. Also, the alt-aligned pileups work contributed to winning both the PacBio and multi-technology categories for the “all genomic regions” benchmark.

As an aside, it was really fun to be part of this competition with my team. It took place in the summer of 2020 and brought us together during the hardest time of the pandemic.

N-sample DeepVariant

In 2021, I led a major refactor of DeepVariant and DeepTrio, generalizing the make-examples code to support N samples instead of separate code for the 1-sample (DeepVariant) and 3-sample (DeepTrio) architectures. See release notes. I included a generalized multi-sample prototype of make-examples that later enabled much faster experimentation and development of DeepSomatic, which uses 2 samples (tumor/normal). This refactoring also stopped DeepTrio’s codebase from diverging: DeepTrio immediately gained improvements that had been added to DeepVariant since DeepTrio’s creation, such as using BAM HP tags, and it became much more maintainable.
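The benefit of the N-sample design can be shown with a small sketch: one code path takes a list of samples, so the 1-sample, 2-sample, and 3-sample cases differ only in the length of that list. The `SamplePileup` type, the `stack_samples` function, and the assumption that each sample contributes a block of rows to a single image are hypothetical and for illustration only. This is not the actual make-examples code.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class SamplePileup:
    name: str         # hypothetical label, e.g. "child" or "tumor"
    rows: np.ndarray  # shape (height, width, channels)


def stack_samples(samples: list[SamplePileup]) -> np.ndarray:
    """Stack per-sample pileup rows into one image, for any number of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    trailing_shapes = {s.rows.shape[1:] for s in samples}
    if len(trailing_shapes) != 1:
        raise ValueError("all samples must share the same width and channel count")
    return np.concatenate([s.rows for s in samples], axis=0)


def blank(name: str, height: int) -> SamplePileup:
    """Make an empty placeholder pileup with an assumed width and channel count."""
    return SamplePileup(name, np.zeros((height, 221, 6), dtype=np.uint8))


single = stack_samples([blank("sample", 100)])
pair = stack_samples([blank("tumor", 100), blank("normal", 100)])
trio = stack_samples([blank("parent1", 40), blank("child", 60), blank("parent2", 40)])
```

With this shape of API, adding a new multi-sample caller means choosing the sample list rather than forking the example-building code.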

Mentoring

I hosted/co-hosted two interns:

- Hojae Lee, 2020, worked on interpretability methods like saliency.

- Kishwar Shafin, 2021, worked on something that I think is still a secret… but then he joined the team full time after finishing his PhD.